Khoj: Your AI Second Brain

Khoj is a personal AI app to extend your capabilities. It smoothly scales up from an on-device personal AI to a cloud-scale enterprise AI.

- Khoj is an open source, personal AI

- You can chat with it about anything. When relevant, it uses the files you've shared with it to respond. It can also access information from the public internet.

- Quickly find relevant notes and documents using natural language

- It understands PDF, plaintext, Markdown, org-mode files, Notion pages and GitHub repositories

- Access it from your Emacs, Obsidian, the Khoj desktop app, or any web browser

- Use our cloud instance to access your Khoj anytime from anywhere, or self-host on consumer hardware for privacy

Features

Khoj supports a variety of features, including search and chat with a wide range of data sources and interfaces.

Search

- Local: Your personal data stays local. All search and indexing is done on your machine when you self-host

- Incremental: Incremental search for a fast, search-as-you-type experience

Chat

- Faster answers: Get answers faster and more smoothly than with search. No need to manually scan through your notes to find them.

- Iterative discovery: Iteratively explore and (re-)discover your notes

- Assisted creativity: Smoothly move between answer retrieval and content generation

- Works online or offline: Chat using online or offline AI chat models

General

- Cloud or Self-Host: Use the cloud instance to access Khoj anytime from anywhere, or self-host for privacy

- Natural: Advanced natural language understanding using Transformer-based ML models

- Pluggable: Modular architecture makes it easy to plug in new data sources, frontends and ML models

- Multiple Sources: Index your Org-mode, Markdown, PDF, plaintext files, GitHub repos and Notion pages

- Multiple Interfaces: Interact from your web browser, Emacs, Obsidian, Desktop app or even WhatsApp

Supported Interfaces

Khoj is available as a Desktop app, Emacs package, Obsidian plugin, Web app and WhatsApp AI.

Supported Data Sources

Khoj can understand your Word, PDF, org-mode, Markdown, plaintext files, GitHub projects and Notion pages.

Demo

Go to https://app.khoj.dev to see Khoj live.

Self-Host

Learn how to self-host Khoj on your own machine.

Benefits to self-hosting:

- Privacy: Your data will never have to leave your private network. You can even use Khoj without an internet connection if deployed on your personal computer.

- Customization: You can customize Khoj to your liking, from models, to host URL, to feature enablement.

Setup Khoj

These are the general setup instructions for self-hosted Khoj. You can install the Khoj server using either Docker or Pip.

Offline Model + GPU: To use the offline chat model with your GPU, we recommend using the Docker setup with Ollama. You can also use the local Khoj setup via the Python package directly.

Restart your Khoj server after the first run to ensure all settings are applied correctly.

Prerequisites

Option 1: Install Docker Desktop. Make sure you also install the Docker Compose tool.

Option 2: Use Homebrew to install Docker and Docker Compose.

brew install --cask docker

brew install docker-compose
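Before you continue, a small check can confirm that both tools are installed. This is a minimal sketch that only tests whether each command is on your PATH. The `check_tool` helper is hypothetical and is not part of Khoj.

```shell
#!/usr/bin/env sh
# Hypothetical helper (not part of Khoj): report whether a command is on PATH.
check_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "found: $1"
    return 0
  fi
  echo "missing: $1" >&2
  return 1
}

# Check the two tools this guide relies on, remembering any failure.
status=0
for tool in docker docker-compose; do
  check_tool "$tool" || status=1
done

if [ "$status" -eq 0 ]; then
  echo "All prerequisites found."
else
  echo "Install the missing tools above before continuing." >&2
fi
```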

Setup

Download the Khoj docker-compose.yml file from GitHub

mkdir -p ~/.khoj && cd ~/.khoj

wget https://raw.githubusercontent.com/khoj-ai/khoj/master/docker-compose.yml

Configure the environment variables in the docker-compose.yml

- Set KHOJ_ADMIN_PASSWORD, KHOJ_DJANGO_SECRET_KEY (and optionally the KHOJ_ADMIN_EMAIL) to something secure. This allows you to customize Khoj later via the admin panel.

- Set OPENAI_API_KEY, ANTHROPIC_API_KEY, or GEMINI_API_KEY to your API key if you want to use OpenAI, Anthropic or Gemini commercial chat models respectively.

- Uncomment OPENAI_API_BASE to use Ollama running on your host machine, or set it to the URL of your OpenAI-compatible API, such as vLLM or LM Studio.
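One way to produce secure values for KHOJ_DJANGO_SECRET_KEY and KHOJ_ADMIN_PASSWORD is to generate random hex strings. The sketch below assumes the `openssl` CLI is installed. The `gen_secret` helper is hypothetical, and you would paste the printed values into the environment section of your docker-compose.yml yourself.

```shell
#!/usr/bin/env sh
# Hypothetical helper: print N random bytes as lowercase hex (default 32 bytes = 64 chars).
# Assumes the openssl CLI is available.
gen_secret() {
  openssl rand -hex "${1:-32}"
}

# Print candidate values to copy into docker-compose.yml.
echo "KHOJ_DJANGO_SECRET_KEY=$(gen_secret 32)"
echo "KHOJ_ADMIN_PASSWORD=$(gen_secret 16)"
```

Hex output avoids quoting problems with special characters in YAML. A 64-character key also comfortably exceeds the minimum length that Django's security checks recommend for a secret key.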

Start Khoj by running the following command in the same directory as your docker-compose.yml file.

cd ~/.khoj

docker-compose up

Remote Access: By default, Khoj is only accessible on the machine it is running on. To access Khoj from a remote machine, see the Remote Access docs.

Your setup is complete once you see 🌖 Khoj is ready to use in the server logs on your terminal.
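If you'd rather not watch the logs, a script can poll the web app until it responds. This is a minimal sketch that assumes `curl` is installed and that Khoj listens on the default http://localhost:42110. The `wait_for_khoj` name, the retry count and the 2-second delay are arbitrary choices made for illustration.

```shell
#!/usr/bin/env sh
# Hypothetical helper: poll a URL until it responds or attempts run out.
# Defaults assume the local Khoj server from this guide.
wait_for_khoj() {
  url="${1:-http://localhost:42110}"
  attempts="${2:-30}"
  i=1
  while :; do
    # -f treats HTTP errors (>= 400) as failures; -o /dev/null discards the body.
    if curl -fsS -o /dev/null "$url" 2>/dev/null; then
      echo "Khoj is reachable at $url"
      return 0
    fi
    if [ "$i" -ge "$attempts" ]; then
      break
    fi
    i=$((i + 1))
    sleep 2
  done
  echo "No response from $url after $attempts attempts" >&2
  return 1
}
```

For example, run `wait_for_khoj && echo "Ready"` in a second terminal after `docker-compose up`. Redirects (3xx) count as a response because curl is not told to follow or reject them.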

Use Khoj

You can now open the web app at http://localhost:42110 and start interacting!

Nothing else is necessary, but you can customize your setup further by following the steps below.

First Message to Offline Chat Model: The offline chat model gets downloaded when you first send a message to it. The download can take a few minutes! Subsequent messages should be faster.

Add Chat Models

Log in to the Khoj Admin Panel

Go to http://localhost:42110/server/admin and log in with the admin credentials you set up during installation.

CSRF Error: To avoid a CSRF error, access the admin panel via localhost, not 127.0.0.1.

DISALLOWED HOST or Bad Request (400) Error: You may hit this if you try to access Khoj on a custom domain or IP address (e.g. 192.168.12.3 or example.com) or over HTTP. To avoid it, set the environment variable KHOJ_DOMAIN=your-domain, and also KHOJ_NO_HTTPS=True if you're serving over plain HTTP.

Configure Chat Model

Set up the chat model you want to use. Khoj supports both local and online chat models.

Using Ollama? See the Ollama Integration section for more custom setup instructions.

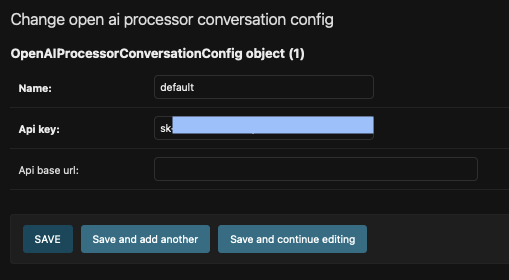

Create a new AI Model API in the server admin settings.

- Add your OpenAI API key

- Give the configuration a friendly name like OpenAI

- (Optional) Set the API base URL. This is only relevant if you're using another OpenAI-compatible proxy server like Ollama or LM Studio.
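Before saving the configuration, you can check that the API key and base URL work by listing the models the endpoint exposes. This also shows the exact model names to enter in the chat-model field. The sketch assumes `curl` is installed and that the endpoint follows the OpenAI-style `GET {base}/models` route. `API_BASE`, `models_url` and `list_models` are hypothetical names, and the Ollama URL in the comment assumes Ollama's default port.

```shell
#!/usr/bin/env sh
# Hypothetical helpers for checking an OpenAI-compatible API before adding it to Khoj.

# Build the model-listing URL, tolerating one trailing slash on the base URL.
models_url() {
  base="${1%/}"
  echo "$base/models"
}

# List models. API_BASE defaults to OpenAI's public endpoint.
# For Ollama on the same machine, API_BASE=http://localhost:11434/v1 is assumed to work.
list_models() {
  curl -sS "$(models_url "${API_BASE:-https://api.openai.com/v1}")" \
    -H "Authorization: Bearer ${OPENAI_API_KEY:-}"
}
```

For example, run `OPENAI_API_KEY=sk-... list_models` or `API_BASE=http://localhost:11434/v1 list_models` from your host. Inside the Khoj Docker container, localhost refers to the container itself. The base URL you enter in the admin panel may therefore need to point at your host machine instead.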

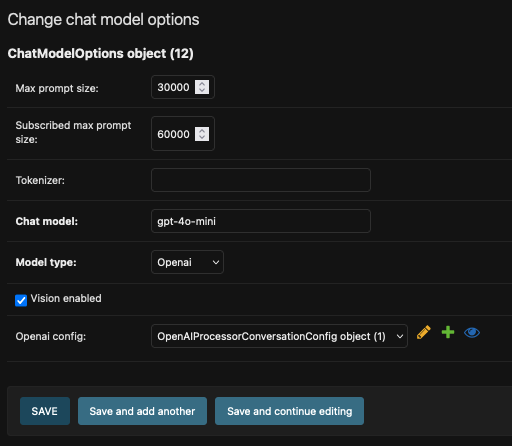

Create a new chat model

- Set the chat-model field to an OpenAI chat model. Example: gpt-4o.

- Make sure to set the model-type field to OpenAI.

- If your model supports vision, set the vision enabled field to true. This is currently only supported for OpenAI models with vision capabilities, like gpt-4o. Vision capabilities in other chat models are not currently used.

- The tokenizer and max-prompt-size fields are optional. Set them only if you're sure of the tokenizer or token limit for the model you're using; this improves context stuffing. Contact us if you're unsure what to do here.

Multiple Chat Models: Set your preferred default chat model in the Default and Advanced fields of your ServerChatSettings. Khoj uses these chat models for all intermediate steps, like intent detection and web search.

Sync your Knowledge

- You can chat with your notes and documents using Khoj.

- Khoj can keep your files and folders synced using the Khoj Desktop, Obsidian or Emacs clients.

- Your Notion workspace can be directly synced from the web app.

- You can also just drag and drop specific files you want to chat with on the Web app.

Setup Khoj Clients

The Khoj web app is available by default to chat, search and configure Khoj.

You can also install a Khoj client to easily access it from Obsidian, Emacs, WhatsApp or your OS and keep your documents synced with Khoj.